4/23/2020:

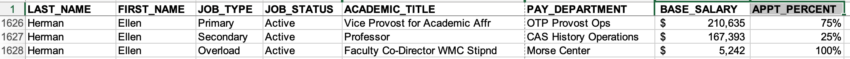

Another year, another budget crisis, more questions about where UO’s money is going. I emailed VP Herman, who is in charge of this project, on March 25th:

Hi Ellen,

I’ve heard a rumor that the administration has abandoned or perhaps just delayed this effort. I’m hoping that you can provide some details on where this proposal currently stands. Thanks,

Bill Harbaugh

She didn’t answer, so on April 1st I filed a public record request. Yesterday I got this response:

Dear Mr. Harbaugh,

The University has searched for, but was unable to locate, records responsive to your request for “…a public record showing the current status of the Faculty tracking / Insights project”, made 4/1/2020.

It is the office’s understanding that this project has been placed on hold; however, there are no records documenting this decision.

The office considers this to be fully responsive to your request, and will now close your matter. Thank you for contacting the office with your request.

Sincerely, Office of Public Records

5/8/2019 update:

With the budget crisis, you’d think this proposal would be in the trash can. Apparently not.

3/18/2019: Faculty tracking software vendor explains time-suck & “thought leadership programming” junket

So why isn’t the provost’s office being clear about what this will cost?